Houston, we have a problem. I want ChatGPT to write a 1200-word article about dog food, but it only gives me 500 words. Why?

The number of words generated by GPT-4 or any similar language model depends on several factors, including the specific prompt, the model’s parameters, and the surrounding context (such as earlier messages in the chat). GPT-4 is designed to be flexible and to generate text that is coherent and relevant to the input prompt, and it does not have a fixed word limit. Below are the basic factors that may influence the length of the output:

Prompt Complexity

If your prompt is too simple or lacks sufficient context, the model may generate shorter responses. More detailed and context-rich prompts tend to elicit longer responses. But is this true?

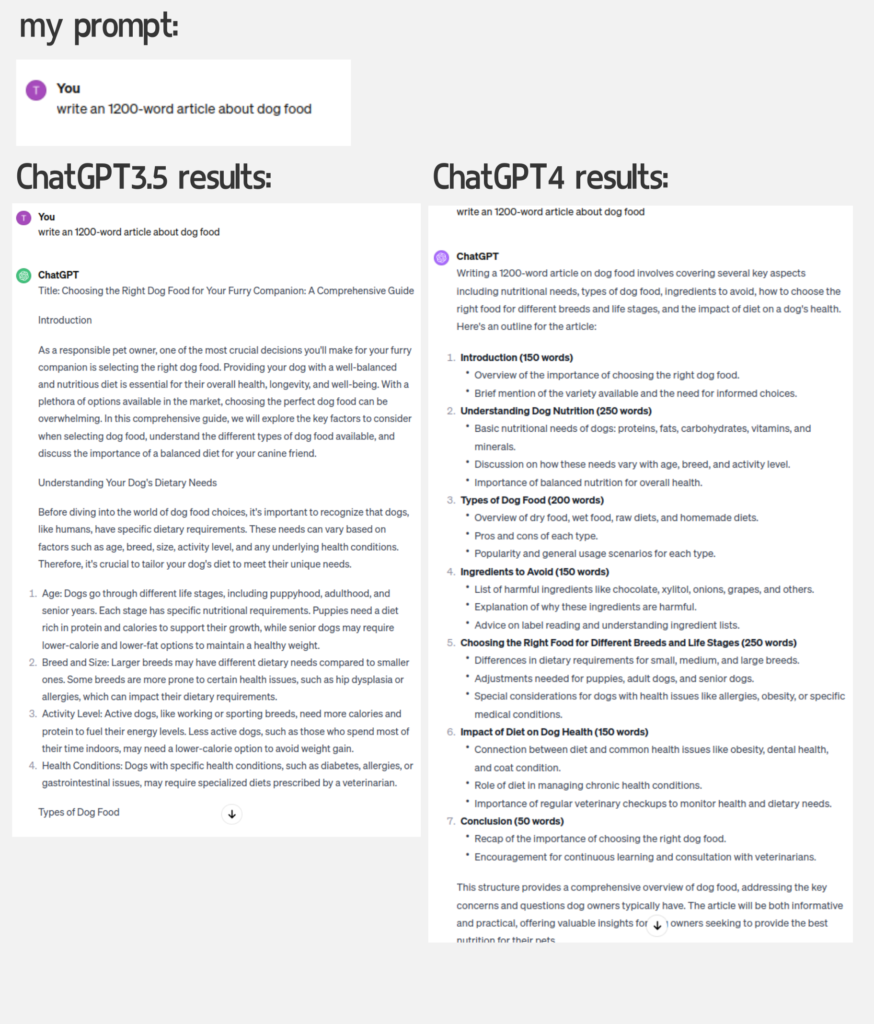

Based on my limited experience with ChatGPT, prompt complexity wasn’t an issue. For example, my prompt for ChatGPT-3.5 was:

write an 1200-word article about dog food

The result was surprisingly good. At approximately 1200 words, ChatGPT-3.5 asked me to continue generating, and it ended at 1553 words. Yay, sunny and happy days.

But what about GPT-4 in ChatGPT Plus?

We started the same way, with a low-complexity prompt:

write an 1200-word article about dog food

The output was not the article. Instead, GPT-4 suggested a table of contents with the number of words it planned to include in each section, and those numbers added up to 1200 (good math, bot!). Take a look at the outline for the article on the right side of the picture:

OK, the table of contents looked good, so I decided to go with its suggestion. My next prompt to GPT-4 was:

Yes, follow that structure for the article.

The output? Only 503 words.

OK, I tried another prompt:

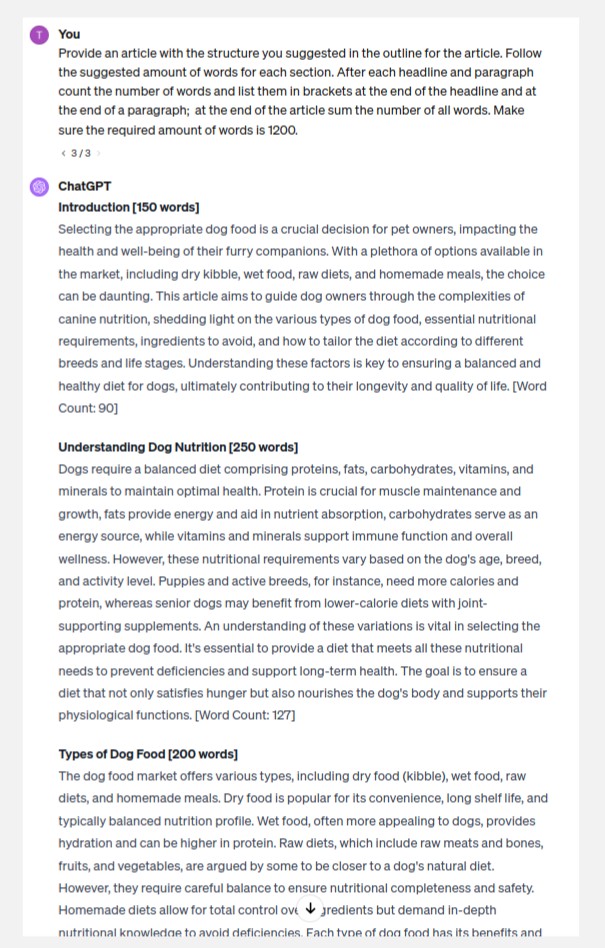

Provide an article with the structure you suggested in the outline for the article. Follow the suggested amount of words for each section.

This time the output was a bit better: after each headline, GPT-4 listed the suggested number of words for that section. But when I counted the words at the end, there were only 845, not the 1200 I requested and GPT-4 “promised” in its brackets.

Why is that? You can’t really blame prompt complexity: the prompt was as simple as it gets, and the very same starting prompt got ChatGPT-3.5 past 1200 words. So, why then? I would say it was the tokens. Language models operate on tokens, not words. One token is roughly 4 characters of English text, and a token can be a whole word, a piece of a word (a subword unit), or punctuation, depending on the language and the tokenizer. Therefore, language models are not really good at counting words: what they actually see and produce are tokens. So I tried the following prompt:
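To see why words and tokens don’t line up one to one, here is a minimal Python sketch that counts words with a simple regular expression and estimates tokens with the ~4-characters-per-token rule of thumb. The function names and the word pattern are my own assumptions for illustration; for exact token counts you would use a real tokenizer such as OpenAI’s open-source `tiktoken` library, which this sketch does not use.

```python
import math
import re

# Assumption: a "word" is a run of letters/digits, optionally joined by an
# apostrophe or hyphen (so "1200-word" and "dog's" each count as one word).
WORD_PATTERN = re.compile(r"[A-Za-z0-9]+(?:['’-][A-Za-z0-9]+)*")


def count_words(text: str) -> int:
    """Count words roughly the way a human editor would."""
    return len(WORD_PATTERN.findall(text))


def estimate_tokens(text: str) -> int:
    """Rough token estimate: about 4 characters of English text per token."""
    return math.ceil(len(text) / 4)


sample = "Dogs need a balanced diet: protein, fats, carbohydrates, vitamins and minerals."
print("words:", count_words(sample))
print("estimated tokens:", estimate_tokens(sample))
```

The ratio between the two numbers shifts from text to text (long words, numbers and punctuation all change it), which is one reason a model that works in tokens can drift when asked for an exact word count.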

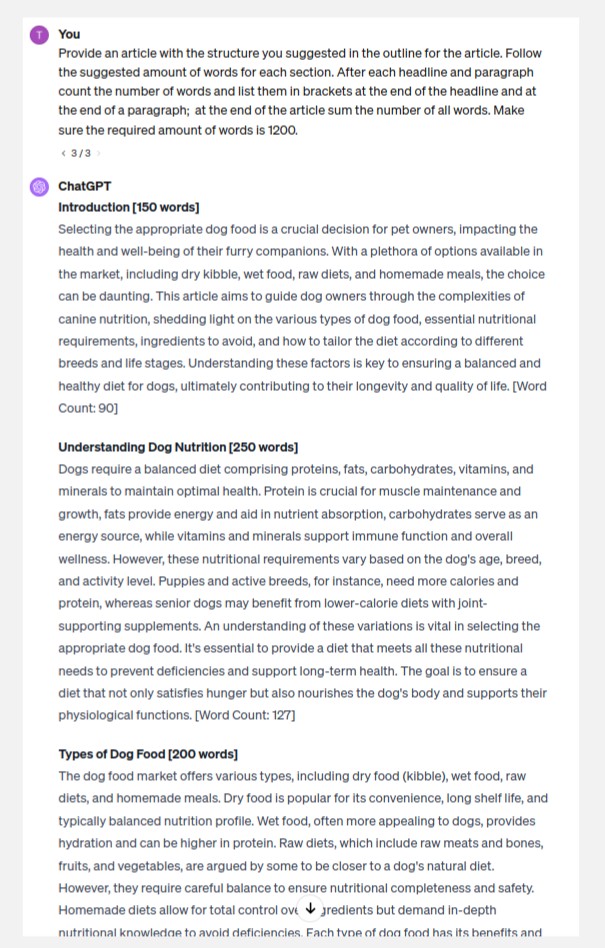

Provide an article with the structure you suggested in the outline for the article. Follow the suggested amount of words for each section. After each headline and paragraph count the number of words and list them in brackets at the end of the headline and at the end of a paragraph; at the end of the article sum the number of all words. Make sure the required amount of words is 1200.

Unfortunately, the result wasn’t what I expected, and the word counts GPT-4 had suggested were not met.

Next, I specified that I wanted the exact number of words, using this prompt:

Provide an article with the outline structure you suggested. Follow the exact number of words you suggested for each section. After each headline and each paragraph count the number of words and list them in brackets at the end of the headline and at the end of a paragraph; at the end of the article sum the number of all words. Make sure the sum of words is exactly 1200, so if needed adjust the number of words in each paragraph.

Well, well, well. At first glance, the results looked a bit better: there were brackets after each headline (it had ignored this part of my request in previous prompts) and after each paragraph. But again, ChatGPT Plus was totally off with the word counts, and it even misled me: after each paragraph it listed the number of words it had “counted”, but these numbers were wrong! The total listed at the end of the article was 1200, but probably only because I asked it to list that number (by the way, I also asked it to make sure the actual SUM of words was 1200, not just to write 1200 in the brackets). Therefore, I also consider this attempt unsuccessful.
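Rather than trusting the numbers in brackets, you can check them yourself. The sketch below assumes (hypothetically) that the model writes its count as “(123 words)” at the end of each headline or paragraph and that blocks are separated by blank lines; it compares each claimed count with an actual count. The marker format and the function name are assumptions, so adjust the pattern to whatever your output looks like.

```python
import re

# Assumption: the model ends each headline/paragraph with a marker like "(123 words)".
MARKER = re.compile(r"\((\d+)\s*words?\)\s*$", re.IGNORECASE)
WORD = re.compile(r"[A-Za-z0-9]+(?:['’-][A-Za-z0-9]+)*")


def audit_counts(article: str) -> list[tuple[int, int]]:
    """Return (claimed, actual) word counts for every block that has a marker."""
    results = []
    for block in article.split("\n\n"):  # blocks are separated by blank lines
        block = block.strip()
        match = MARKER.search(block)
        if match is None:
            continue  # no self-reported count on this block
        body = block[:match.start()]  # the text without the marker itself
        results.append((int(match.group(1)), len(WORD.findall(body))))
    return results


# A tiny made-up example of the kind of output GPT-4 produced.
article = """Why Diet Matters (3 words)

Dogs need a balanced diet with protein and fats. (40 words)"""

audit = audit_counts(article)
for claimed, actual in audit:
    status = "OK" if claimed == actual else "MISMATCH"
    print(f"claimed {claimed:4d}  actual {actual:4d}  {status}")
print("claimed total:", sum(c for c, _ in audit), "actual total:", sum(a for _, a in audit))
```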

Temperature Setting

Another thing people suggest may affect the length of the GPT-4 output is the “temperature.” Temperature is a hyperparameter that controls the randomness of the output. It typically ranges from 0 to 1 (the OpenAI API actually accepts values up to 2). The claim is that higher temperatures (for example, above 0.7) lead to more varied and potentially shorter responses, while lower temperatures (for example, 0.3) result in outputs that are more focused and detailed and, most of all, longer. What is certain is that with the temperature set to 0 you will almost always get the same response to a given prompt, while at higher temperatures the output will vary considerably.
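Here is what temperature does mechanically, in the way it is commonly described for language models: the model’s raw scores (logits) for the next token are divided by the temperature before being turned into probabilities with softmax, and a temperature of 0 is treated as always picking the top token. This is a generic Python sketch with made-up tokens and scores, not OpenAI’s actual sampling code. Notice that temperature only reshapes the choice of the next token; there is no length setting in it, so any effect on length is indirect.

```python
import math
import random


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw next-token scores into probabilities, scaled by temperature."""
    if temperature == 0:
        # Temperature 0 is treated as greedy decoding: the top token gets everything.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens: list[str], logits: list[float], temperature: float,
                 rng: random.Random) -> str:
    """Pick the next token at random according to the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical candidates for the word after "High-quality dog ..."
tokens = ["food", "kibble", "treats", "owners"]
logits = [2.0, 1.5, 0.5, 0.1]

for t in (0.0, 0.3, 1.0):
    probs = softmax_with_temperature(logits, t)
    print(f"temperature {t}: {[round(p, 3) for p in probs]}")

rng = random.Random(42)
print([sample_token(tokens, logits, 1.0, rng) for _ in range(5)])
```

At 0.0 the top token gets all the probability; at 1.0 the alternatives keep a real share, which is why outputs vary more from run to run.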

So, maybe changing the temperature is the way to deal with our problem (GPT not wanting to generate a longer response). Let’s try a very low temperature (0.0 to 0.1) in both ChatGPT-3.5 and ChatGPT Plus and see whether it brings us the desired number of words, or at least something close to it, considering that so far GPT-4 usually produced around 500 words and the best we could squeeze out of it was around 850 words.

How to Set the Temperature in ChatGPT?

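This process is super simple: write your prompt as usual (for example, “Write a 1200-word article.”) and add “Set the temperature to 0.0” (or whatever number between 0 and 1 you want). One caveat: the ChatGPT chat interface has no temperature control, so the model only sees this instruction as part of the prompt text; the real sampling temperature can only be set through the OpenAI API or the Playground.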
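For comparison, this is roughly what setting the temperature as a real parameter looks like when calling the OpenAI Chat Completions API directly. The sketch only builds the JSON request body (it does not send it); you would POST it to `https://api.openai.com/v1/chat/completions` with your API key. `model`, `messages`, `temperature` and `max_tokens` are documented request fields, while the helper name and the value `max_tokens=2000` are my own assumptions (some newer models expect `max_completion_tokens` instead).

```python
import json


def build_chat_request(prompt: str, temperature: float, max_tokens: int,
                       model: str = "gpt-4") -> dict:
    """Build a Chat Completions request body (hypothetical helper)."""
    # The API accepts temperature values from 0 to 2.
    if not 0.0 <= temperature <= 2.0:
        raise ValueError("temperature must be between 0 and 2")
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,  # a real sampling parameter, not prompt text
        "max_tokens": max_tokens,    # upper bound on the response length in tokens
    }


payload = build_chat_request(
    "Write a 1200-word article about dog food.",
    temperature=0.0,
    max_tokens=2000,  # assumption: headroom above the ~1600 tokens 1200 words need
)
print(json.dumps(payload, indent=2))
```

Note that `max_tokens` is a ceiling, not a target: the model can still stop well before it, so even through the API it will not force a 1200-word article.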

The prompt was:

Write an 1200-word article about dog food. Set the temperature to 0.0

The result? Of course, ChatGPT Plus first explained that this was a complex task and that it would give me a short draft, a sort of table of contents, and then write a short article. I replied that I liked the draft and asked it to write the article. And it did, but not 1200 words: it wrote about 800 words instead. Again, the temperature setting did not get us to (or even close to) the desired number of words.

What about ChatGPT-3.5?

When I asked it to “Write an article with 1200 words about dog food. Set the temperature to 0.0”, ChatGPT-3.5’s response was:

“I’m sorry, but I cannot generate a 1200-word article about dog food with a temperature of 0.0. A temperature of 0.0 would result in very repetitive and robotic text. However, I can certainly provide you with an informative article about dog food with a more appropriate temperature setting to ensure it’s engaging and readable. Please let me know what temperature you’d like, and I’ll be happy to assist you.”

I responded by setting the temperature to 0.1.

GPT-3.5’s response was an article of 1350 words, although I had to press the “Continue generating” button after approximately 1000 words. It was about the same scenario as at the very beginning: it produced the desired number of words (and more), and the temperature did not play a role. Neither the default setting (presumably somewhere closer to 1.0) nor a very low temperature (0.1) made a significant difference to the number of words in the article.

The Limit for Tokens

While researching other blog posts and articles about my issue, another reason I came across for not getting the desired number of words was the maximum token limit that every GPT model has. As mentioned before, language models operate on tokens (not words), and tokens can be whole words, subword units, punctuation, or single characters. The prompt and the response share the same token budget, so one possibility was that my prompt was too long: it “ate” part of the budget, GPT was left with whatever remained, and it hit the limit before reaching my desired word count. However, let’s put our reality glasses on: “Write 1200-word article” is as simple a prompt as it gets, and I’d bet it did not burn any meaningful share of the tokens. Using OpenAI’s rule of thumb that a token is about three quarters of a word, even the 1200-word article itself needs only around 1600 tokens. So, the limit? No, it didn’t reach the token limit, for sure.
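The back-of-the-envelope math can be sketched in Python. It uses OpenAI’s rules of thumb for English text (about 4 characters, or about three quarters of a word, per token) and an assumed limit of 8,192 tokens, the context window of the original GPT-4 API model; ChatGPT may apply its own, lower cap per response, so treat the limit as a placeholder. Keep in mind that in a chat, earlier messages (such as the table of contents) also count toward the context.

```python
import math

CHARS_PER_TOKEN = 4      # rule of thumb for English text
WORDS_PER_TOKEN = 0.75   # rule of thumb: 100 tokens is about 75 words
CONTEXT_LIMIT = 8192     # assumption: placeholder limit, see the note above


def tokens_for_text(text: str) -> int:
    """Estimate how many tokens a piece of text uses."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def tokens_for_words(words: int) -> int:
    """Estimate how many tokens a response of `words` words needs."""
    return math.ceil(words / WORDS_PER_TOKEN)


prompt = "Write 1200-word article"
prompt_tokens = tokens_for_text(prompt)
needed = tokens_for_words(1200)
left_over = CONTEXT_LIMIT - prompt_tokens

print(f"prompt uses ~{prompt_tokens} tokens")
print(f"a 1200-word article needs ~{needed} tokens")
print(f"budget left after the prompt: ~{left_over} tokens, fits: {needed <= left_over}")
```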

So, why didn’t it reach the desired number of words?

One reason is that GPT models do not handle “a number of words” well: they work with tokens, not words. We saw proof of this when we asked GPT-4 to list the number of words after each paragraph, and the poor guy was off with the numbers, showing that it can’t reliably count words.

Another thing that comes to mind is the training data, although I didn’t experiment with it here. GPT models are trained on a huge set of texts, much of it from the internet. Some of those texts are 200 words long, some 500 and some 1500, but my guess (good old logic) is that the majority are shorter than 1200 words, so this could also be a reason. For example, if we trained our own model on 400 texts that were each more than 1200 words long, we would likely get responses of about the same length as the texts the model was trained on.

In conclusion – what can you do to get a longer response?

We tried a few things: a more detailed and specific prompt, and even leading GPT-4 to count and list the number of words and then sum them. We experimented with different variations of our prompt, and honestly, GPT-4 did not give the desired results. We also tried setting the temperature to 0.0 to control the randomness and, most of all, the length of the output. It did not help.

That leaves us with one solution: breaking our request down into multiple prompts. For example, when GPT-4 offers a table of contents, I review the TOC, check whether it fits my context, and then follow its structure, requesting the text section by section, one prompt at a time. I believe this is a fair approach, and it also lets you maintain the quality and relevance of the content rather than simply aiming for a specific word count. Changing the prompt didn’t help, changing the temperature didn’t help, GPT definitely did not reach the max token limit, and training our own model was not viable for this experiment, so the only thing left was to break down the suggested TOC and have GPT-4 generate the article prompt by prompt.
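The section-by-section approach can be scripted so that the word budgets are checked before you start. The outline below is hypothetical (illustrative titles and budgets, not the exact TOC GPT-4 suggested), and the prompt wording is just one possible phrasing; each generated prompt would then be pasted into the same chat, one at a time.

```python
# Hypothetical outline: titles and word budgets are illustrative only.
OUTLINE = [
    ("Introduction", 150),
    ("Types of Dog Food", 300),
    ("How to Read a Dog Food Label", 250),
    ("Special Dietary Needs", 300),
    ("Conclusion", 200),
]


def build_section_prompts(topic: str, outline: list[tuple[str, int]],
                          target_total: int) -> list[str]:
    """Turn an outline into one prompt per section, checking the budget first."""
    total = sum(words for _, words in outline)
    if total != target_total:
        raise ValueError(f"outline sums to {total} words, expected {target_total}")
    prompts = []
    for title, words in outline:
        prompts.append(
            f"We are writing a {target_total}-word article about {topic}. "
            f'Write only the section "{title}" in about {words} words. '
            "Do not repeat earlier sections."
        )
    return prompts


prompts = build_section_prompts("dog food", OUTLINE, 1200)
for number, prompt in enumerate(prompts, start=1):
    print(f"Prompt {number}: {prompt}")
```

Checking the sum up front catches an outline that doesn’t add up to 1200 before any text is generated; once the sections come back, you can count their words yourself instead of relying on the model’s own count.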