What is style transfer?

Style transfer is the process of applying the style of one image to the content of another. For example, you upload or create a picture of a cat in Stable Diffusion and then, through an image-to-image option, transform that picture into a specific style by updating the prompt, for example by adding line art, sketch art, impressionism, surrealism, or whatever artistic style you think fits best.

Style transfer has become a very popular and, above all, fascinating application of artificial intelligence. Among the available tools, Stable Diffusion has emerged as a powerful and versatile option, capable of generating high-quality stylized images through a process known as image-to-image translation. In this article, we explore how to achieve style transfer with Stable Diffusion, highlighting key methods and providing examples to guide you through the process.

As you probably know, Stable Diffusion is an AI model that generates images from text descriptions. It is a latent diffusion model: rather than working on pixels directly, it starts from random noise in a compressed latent space and refines that noise over a series of denoising iterations into a detailed image. Stable Diffusion was primarily known for text-to-image generation, but for style transfer the key capability is that it can also perform image-to-image translation.

Methods for Style Transfer with Stable Diffusion

Working with Direct Textual Prompts

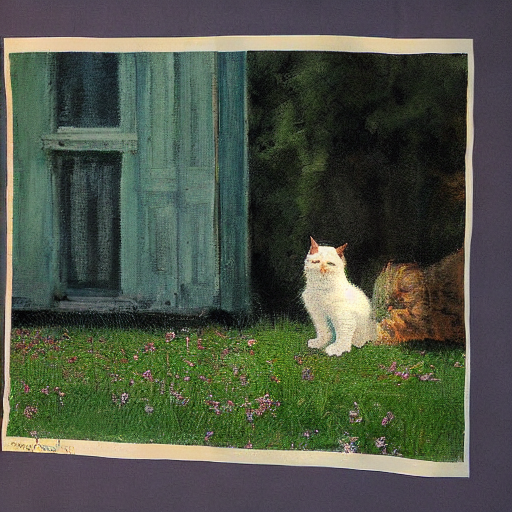

Direct textual prompts are one of the simplest ways to achieve a specific art style within Stable Diffusion. What does that mean? You simply specify the content of the image (for example a cat) and the desired style within the text prompt (for example ‘apply the style of Monet to a photograph of a cat). So, at the end, working with text-to-image the prompt could be: “a cat photo in the style of Monet.” Let’s see what Stable Diffusion created:

Not bad. We have a cat, and we have a Monet style.
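Because this method is nothing more than a content description plus a style phrase, it is easy to generate prompt variants programmatically. Here is a minimal Python sketch; the helper name `style_prompt` and the style list are our own illustrations, not part of Stable Diffusion or any particular interface. You would paste the resulting strings into whichever tool you use.

```python
# Hypothetical helper: builds "<content> in the style of <style>" prompts,
# the same pattern used for the Monet cat example.
def style_prompt(content: str, style: str) -> str:
    """Combine a content description and a style name into one prompt."""
    return f"{content} in the style of {style}"


# Styles mentioned in this article; swap in any style you like.
STYLES = ["Monet", "line art", "sketch art", "impressionism", "surrealism"]

if __name__ == "__main__":
    for style in STYLES:
        print(style_prompt("a cat photo", style))
```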

Embedding Style in the Initial Image

Another, slightly more complex approach is to incorporate the style directly into the initial image by blending a content image with a style image before feeding it into Stable Diffusion. This method often involves pre-processing steps in external tools to create an image that merges the content’s details with the style’s texture and colors. It demands much more knowledge and a lot more work, so if you’re a beginner in visual media, we recommend the first approach.
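To make the idea of pre-blending concrete, here is a deliberately naive sketch: a per-pixel linear mix of a content image and a style image of the same size. It assumes images are stored as plain Python lists of RGB tuples (a hypothetical format chosen so the example has no dependencies). Real pre-processing tools do considerably more than mix colors, so treat this only as an illustration of the blending step.

```python
# Hypothetical image format: a list of rows, each row a list of (R, G, B)
# tuples with values 0-255. Both images must have the same dimensions.
Pixel = tuple[int, int, int]
Image = list[list[Pixel]]


def blend(content: Image, style: Image, alpha: float) -> Image:
    """Return (1 - alpha) * content + alpha * style for every pixel channel."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    if len(content) != len(style) or any(
        len(a) != len(b) for a, b in zip(content, style)
    ):
        raise ValueError("images must have the same dimensions")
    return [
        [
            tuple(round((1 - alpha) * c + alpha * s) for c, s in zip(cp, sp))
            for cp, sp in zip(crow, srow)
        ]
        for crow, srow in zip(content, style)
    ]
```

The blended result would then serve as the initial image in an image-to-image run, where the prompt and the denoising strength decide how much of the mix survives.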

Using Style-Specific Models

Then there are variants of the model fine-tuned for specific styles or artists. These specialized models have been trained on additional data to capture the nuances of particular artistic styles, which makes them highly effective for targeted style transfer. For example, a few months ago, Cornell University published a project in which the authors aimed to transfer images into the style of Calvin and Hobbes comics using Stable Diffusion. The project report summarizes the authors’ journey of fine-tuning Stable Diffusion on a dataset of Calvin and Hobbes comics, with the end goal of converting any input image into the comic’s style, which is essentially what style transfer is all about. The authors fine-tuned stable-diffusion-v1.5 with Low-Rank Adaptation (LoRA) to make the fine-tuning process more efficient, while a Variational Autoencoder (VAE) maps images into and out of the latent space in which the diffusion runs.

Again, this demands specific programming knowledge. First, you need some basic Linux, at least the basic commands, as well as some knowledge of Python. However, if you are familiar with basic programming in, for example, PHP or JavaScript, we believe you will be able to get through the code and change specific hyperparameters. The training data also has some specifics you should be aware of, such as the desired or recommended number of items in the training set, whether your libraries are up to date, the recommended number of epochs, and a few others.

Fine-tuning is an interesting approach to changing styles. However, for that purpose alone we don’t recommend it unless you’re interested in consistently converting images into one desired style, as in the project mentioned earlier, where the authors turned all images into the Calvin and Hobbes comic style.
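We don’t reproduce the project’s code here, but the core arithmetic of LoRA is simple enough to sketch. Instead of updating a large frozen weight matrix W (d × k), LoRA trains two small matrices B (d × r) and A (r × k) and uses W + (alpha / r) · B·A, so only r · (d + k) values are trained. The plain-Python sketch below shows that update and the parameter savings; the function names and the 768 × 768 layer size are illustrative assumptions, not taken from the report.

```python
# Minimal sketch of the LoRA update in plain Python (matrices = lists of rows).

def matmul(x, y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def lora_weight(w, b, a, alpha):
    """Return the adapted weight W + (alpha / r) * B @ A, where r = rows of A."""
    r = len(a)
    delta = matmul(b, a)
    scale = alpha / r
    return [
        [wij + scale * dij for wij, dij in zip(wrow, drow)]
        for wrow, drow in zip(w, delta)
    ]


def trainable_params(d, k, r=None):
    """Trainable values: full fine-tuning (r=None) or LoRA with rank r."""
    return d * k if r is None else r * (d + k)


if __name__ == "__main__":
    # An example 768 x 768 layer: full fine-tuning vs. LoRA at rank 4.
    print(trainable_params(768, 768))
    print(trainable_params(768, 768, r=4))
```

With B initialized to zeros, as LoRA commonly does, the adapted weight starts out identical to the original, and training only moves it as far as the style data requires.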

Changing Style with Image-to-Image Prompting and Denoising Strength

Let’s take the Monet cat from the first example, open it in the image-to-image option, and update the prompt to, let’s say, “a cat photo in the style of line art”. In the first attempt we kept the denoising strength at 0.5, while in the second we increased it to 1. At 0.5 the concept stayed more or less the same, with line art added to the image. At a denoising strength of 1, the initial concept of the image changed completely: instead of the cat on the grass with the green door in the background, we got a black-and-white line-art profile picture of a cat.

- prompt: a cat photo in the style of Monet

- a cat photo in the style of line art; denoising strength 0.5

- a cat photo in the style of line art; denoising strength 1
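Why does 1 behave so differently from 0.5? In image-to-image mode, the input image is encoded into a latent, noised up to an intermediate point of the diffusion schedule, and denoised from there; the denoising strength decides how far back that point is. The sketch below is a simplified model of that logic, loosely following the img2img pipeline in Hugging Face diffusers. It assumes the noise schedule commonly used for Stable Diffusion v1 (1,000 training steps, “scaled linear” betas from 0.00085 to 0.012) and 50 sampling steps; other samplers and tools compute timesteps somewhat differently.

```python
import math

# Assumed SD v1-style schedule: 1000 training timesteps, "scaled linear"
# betas (linear in sqrt(beta)) from 0.00085 to 0.012.
NUM_TRAIN_STEPS = 1000
BETA_START, BETA_END = 0.00085, 0.012


def alphas_cumprod():
    """Cumulative product of (1 - beta_t) for every training timestep."""
    lo, hi = math.sqrt(BETA_START), math.sqrt(BETA_END)
    out, prod = [], 1.0
    for i in range(NUM_TRAIN_STEPS):
        beta = (lo + (hi - lo) * i / (NUM_TRAIN_STEPS - 1)) ** 2
        prod *= 1.0 - beta
        out.append(prod)
    return out


def img2img_schedule(strength, num_inference_steps=50):
    """Return (steps actually run, starting timestep) for a given strength.

    Simplified img2img logic: the sampler skips the first (1 - strength)
    share of its steps, so the input image is noised only up to that point.
    """
    step_ratio = NUM_TRAIN_STEPS // num_inference_steps
    timesteps = [i * step_ratio for i in reversed(range(num_inference_steps))]
    steps_run = min(int(num_inference_steps * strength), num_inference_steps)
    if steps_run == 0:
        return 0, None  # nothing to denoise: the input comes back unchanged
    return steps_run, timesteps[num_inference_steps - steps_run]


def signal_kept(strength, num_inference_steps=50):
    """Weight sqrt(alpha_bar_t) of the original image in the starting latent,
    from x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * noise."""
    _, t = img2img_schedule(strength, num_inference_steps)
    return 1.0 if t is None else math.sqrt(alphas_cumprod()[t])


if __name__ == "__main__":
    for s in (0.5, 1.0):
        steps, t = img2img_schedule(s)
        print(f"strength {s}: {steps} steps from t={t}, "
              f"original signal weight {signal_kept(s):.2f}")
```

Under these assumptions, a strength of 0.5 runs only the second half of the sampling steps and the starting latent still carries roughly half of the original image’s signal, while a strength of 1 starts from almost pure noise, which is consistent with the cat composition being replaced entirely.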

ControlNet Enhances Precision in Style Transfer

ControlNet is a neural network architecture designed to give you more control over the image generation process, which is particularly useful in tasks like style transfer. It adds an extra conditioning input to the model: a control image, such as an edge map, depth map, or pose skeleton extracted from a source picture, which guides generation so that the composition follows the control image while the prompt changes the style. Combined with masks, which restrict changes to selected regions of an image, this enables a more nuanced application of styles, ensuring that artistic effects are applied precisely where you want them.

For example, we took the Monet cat, masked the right-hand part of the door in the Unified Canvas (the part you can see changed), and asked Stable Diffusion through the prompt to change the color of the door to white. Here’s the result:

- a cat photo in the style of line art; denoising strength 0.5

- change color of the door to white

It’s normal that the door isn’t completely white, because Stable Diffusion tries to blend the new request (a white door) into the initial image. We believe that with additional rounds of iterative refinement we could achieve the desired white door.

Basically, what you need to do in this process is prepare the control signals or masks that define the regions of the image you want to modify. Masks can mark areas where a particular style should be applied or regions that should remain unchanged. In our case, we wanted to change the color of the door, so we masked its right-hand part.
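Inpainting tools differ in how they use the mask internally (many also feed it to the model and blend in latent space), but its effect on the final picture can be approximated by a per-pixel composite: masked pixels come from the new generation, unmasked pixels from the original. Here is a minimal sketch assuming grayscale images stored as lists of float rows in [0, 1]; `composite` and `rect_mask` are hypothetical helpers, not functions of any Stable Diffusion tool.

```python
# Hypothetical grayscale images: lists of rows of floats in [0, 1].
# Mask convention: 1.0 = take the generated pixel, 0.0 = keep the original.

def rect_mask(height, width, top, left, bottom, right):
    """Return a mask that is 1.0 inside rows [top, bottom) and
    columns [left, right), and 0.0 everywhere else."""
    return [
        [1.0 if top <= y < bottom and left <= x < right else 0.0
         for x in range(width)]
        for y in range(height)
    ]


def composite(original, generated, mask):
    """Per-pixel mix: generated where mask is 1, original where it is 0;
    fractional values (a feathered edge) blend the two linearly."""
    return [
        [m * g + (1.0 - m) * o for o, g, m in zip(orow, grow, mrow)]
        for orow, grow, mrow in zip(original, generated, mask)
    ]
```

For the door example, the mask would cover the door’s right-hand part, so everything outside it (the cat, the grass) stays untouched.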

The next step is to specify the style and content, through prompting (textual descriptions), reference images, or a combination of both. You can also specify different styles for the different regions of the image defined by the control signals.

Finally, before you generate the image, you configure ControlNet with the prepared control signals and style descriptions. This configuration tells the model how and where to apply the specified styles.
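A typical ControlNet control signal is an image derived from your source picture, such as an edge map, which fixes the outlines while the prompt changes the style. Edge maps for ControlNet are commonly made with OpenCV’s Canny detector; as a dependency-free stand-in, the sketch below thresholds a Sobel gradient magnitude, which produces a similar black-and-white outline. The function name, image format, and threshold are assumptions for illustration.

```python
import math

# Sobel gradient magnitude with a threshold: a simple stand-in for the
# Canny edge detector typically used to prepare ControlNet edge inputs.

def edge_map(gray, threshold=0.5):
    """gray: list of rows of floats in [0, 1].
    Returns a 0/1 edge map of the same size; border pixels are left at 0."""
    h, w = len(gray), len(gray[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Horizontal gradient (right column minus left column).
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y][x - 1] - gray[y + 1][x - 1])
            # Vertical gradient (bottom row minus top row).
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y - 1][x] - gray[y - 1][x + 1])
            out[y][x] = 1 if math.hypot(gx, gy) >= threshold else 0
    return out
```

The resulting map, scaled to 0-255 and saved as an image, is the kind of picture you would load as the control image, while the prompt supplies the new style.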

Other Advanced Techniques in Style Transfer

In addition to tweaking ControlNet, other advanced techniques have been developed to refine the style transfer process. These include:

Fine-tuning Stable Diffusion Models: We’ve mentioned this technique before, but since it is genuinely advanced, it is worth repeating. Custom training or fine-tuning Stable Diffusion models on specific styles or artworks can bring you the results you want and lead to more authentic style replication. As already mentioned, fine-tuning involves training the model on a curated dataset of images that exemplify the target style, which enhances the model’s ability to generate images in that style. You will need some basic programming knowledge.

Latent Space Manipulation: This interesting approach involves manipulating the latent-space representations of images. In Stable Diffusion, the latent space is a lower-dimensional space into which the VAE encoder compresses images (a 512×512 image becomes a 4×64×64 latent). Generation starts from pure noise in this space, which is gradually transformed by an iterative denoising process and finally decoded back into a full-resolution image. You will have to learn to adjust the vectors in the latent space that correspond to style and content; then you can achieve unique style transformations that preserve the content’s integrity while significantly altering its appearance. Not for beginners, that’s for sure.
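One simple way to picture “adjusting vectors that correspond to style” is latent arithmetic: estimate a style direction as the difference between the average latent of styled images and that of plain ones, then add a scaled copy of it to a content latent. Another common operation is spherical interpolation (slerp) between two latents. The sketch below treats latents as flat Python lists (in Stable Diffusion v1 they are 4 × 64 × 64 tensors for a 512 × 512 image). The mean-difference direction is our own illustrative choice, not a method from a specific tool, and all names are hypothetical.

```python
import math

# Latents are flat lists of floats here; all helpers are illustrative.

def add(u, v, scale=1.0):
    """Return u + scale * v, element-wise."""
    return [a + scale * b for a, b in zip(u, v)]


def mean(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def style_direction(style_latents, plain_latents):
    """Hypothetical style vector: mean styled latent minus mean plain latent."""
    return add(mean(style_latents), mean(plain_latents), scale=-1.0)


def slerp(v0, v1, t):
    """Spherical interpolation between two latents, often preferred over a
    straight linear mix for Gaussian noise latents."""
    norm0 = math.sqrt(sum(a * a for a in v0))
    norm1 = math.sqrt(sum(b * b for b in v1))
    cos = sum(a * b for a, b in zip(v0, v1)) / (norm0 * norm1)
    omega = math.acos(max(-1.0, min(1.0, cos)))
    if omega < 1e-6:  # nearly parallel vectors: fall back to linear mixing
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    s0 = math.sin((1 - t) * omega) / math.sin(omega)
    s1 = math.sin(t * omega) / math.sin(omega)
    return [s0 * a + s1 * b for a, b in zip(v0, v1)]
```

A styled latent would then be something like `add(content_latent, direction, scale=0.7)`, where the scale controls how strongly the style is pushed before decoding.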

Iterative Refinement: With this technique, you generate an image, evaluate how well the style has been applied, and then use the output as the input for further refinement. Repeating this loop lets you adjust the style incrementally, which results in a more polished final image.
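The loop itself is easy to express. In the sketch below, `refine_step` stands for one image-to-image pass (usually at a modest denoising strength) and `style_score` for however you judge the result, most often by eye. Both are hypothetical callables, replaced here with toy functions on a single number so the loop can run.

```python
# Sketch of an iterative refinement loop with hypothetical callables:
#   refine_step(image) -> new image   (e.g. one image-to-image pass)
#   style_score(image) -> float       (how well the style is applied, 0..1)

def iterative_refinement(image, refine_step, style_score, target=0.9, max_rounds=10):
    """Feed each output back in as the next input until the score reaches
    `target` or `max_rounds` passes have run. Returns (final, history)."""
    history = [image]
    for _ in range(max_rounds):
        if style_score(image) >= target:
            break
        image = refine_step(image)  # the output becomes the next input
        history.append(image)
    return image, history


if __name__ == "__main__":
    # Toy stand-ins: each pass moves the "image" 30% closer to 1.0.
    final, history = iterative_refinement(
        0.0,
        refine_step=lambda x: x + 0.3 * (1.0 - x),
        style_score=lambda x: x,
    )
    print(len(history) - 1, round(final, 3))
```

The `max_rounds` cap matters in practice: if a change like the white door never fully lands, the loop stops instead of running forever, and you can revise the prompt or mask instead.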

Conclusion

In conclusion, style transfer represents a fascinating intersection of art and artificial intelligence, offering a unique way to blend one or more visual styles in a seamless and creative manner. As illustrated throughout this article, Stable Diffusion stands out as a versatile and powerful tool for such transformations, giving users a range of methods to explore and experiment with. From direct textual prompts and embedding style in the initial image to style-specific models and advanced techniques like ControlNet, the possibilities are vast and varied.

The journey of style transfer with Stable Diffusion is one of exploration and creativity, where the only limits are defined by the imagination of the user. Whether you’re a beginner looking to dip your toes into the world of AI-driven art or a seasoned programmer keen on fine-tuning models for highly specific artistic outputs, the technology offers something for everyone. The examples and methods discussed not only showcase the potential of Stable Diffusion in transforming images but also highlight the broader capabilities of AI in enhancing and redefining the creative process.

As we continue to push the boundaries of what’s possible with AI and art, style transfer with Stable Diffusion serves as a testament to the ongoing evolution of these fields. It’s an exciting time for creators, artists, and technologists alike, as they harness the power of artificial intelligence to bring new visions to life, challenge traditional artistic paradigms, and explore the endless possibilities of digital creativity.